Verbal Cues and Stereotypes

December 8, 2016

Recently, in response to an email message from a male acquaintance, I replied using the word "cute." His message contained a funny, photoshopped image; and, while it made me laugh, it didn't demand more than this one-word acknowledgement. Just a few hours after that, I read a press release entitled "Real men don't say 'cute'."[1] I decided to restore my manhood by writing this article with a modern tool that's mightier than a sword: a desktop computer.

It's generally easy to identify an educated person by his/her vocabulary. The original purpose of the vocabulary portion of the SAT and other such tests was to assess how much a student had read, as a measure of intelligence. A diligent student will read many books, and he will look up the definitions of words he doesn't understand. Over the years, this portion of the test was subverted into a word-memorization activity. Interestingly, a high score from successful memorization is also a good predictor of academic success, since the activity requires both intelligence and perseverance.

Aside from the level of education, what else might be gleaned from a person's speech? I've often been able to determine whether an author is male or female from their use of language; but is such stereotyping more accurate than not? An international team of researchers, including one from the interestingly named Ubiquitous Knowledge Processing Lab, looked into this problem. Team members were from the University of Pennsylvania (Philadelphia, Pennsylvania), the Technische Universität Darmstadt (Darmstadt, Germany), and the University of Melbourne (Melbourne, Victoria, Australia).[2]

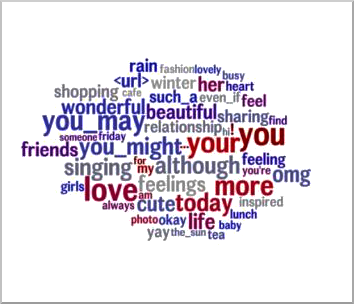

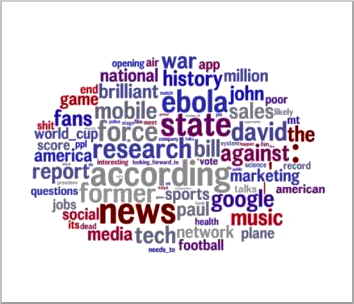

The research team, composed of social psychologists and computer scientists, used publicly available Twitter messages (tweets) to analyze the accuracy of stereotypes.[1] They used the artificial intelligence technique of natural language processing (NLP) to show that the accuracy of stereotypes spans a spectrum from plausible to wrong.[1] NLP aims to give computers an automatic understanding of written language; familiar examples include the Siri intelligent personal assistant, as well as applications such as spell checking and predictive text.[1]

Participants in this psychological study were asked to categorize authors based solely on the contents of their tweets. The researchers identified the actual characteristics of 3,000 Twitter users and divided them into the following categories: male/female; liberal/conservative; younger/older; and no college degree/college degree/advanced degree.[2,3] One hundred tweets from each user, randomly selected from all the tweets that user authored over the course of a year, were placed on the Amazon Mechanical Turk crowdsourcing website, where Internet users were paid two cents every time they matched a tweet to a category.[2,3]

The NLP methodology went beyond the way stereotype research had been done in the past, since it started with behaviors and asked about identity, rather than starting with a group and asking about behaviors.[1] In this way, NLP gave insight into people's stereotypes without asking them to state those stereotypes explicitly. Says lead author Jordan Carpenter,

"This is a novel way around the problem that people often resist openly stating their stereotypes, either because they want to present themselves as unbiased or because they're not consciously aware of all the stereotypes they use."[1]

When the guesses were about age, gender, or politics, they were better than chance.[3] Guesses for education level, however, were worse than chance, possibly because there were three choices in that category rather than two. Gender guesses were 75% correct,[3] affirming my ability to accurately guess whether a writer is male or female. It seems that people think highly educated people never use the f-word, and this contributed to the errors in guessing education (I don't use the f-word, except when talking to myself). Says Carpenter,

"...People had a decent idea that people who didn't go to college are more likely to swear than people with PhDs, but they thought PhDs never swear, which is untrue."[1]
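As a rough illustration of what "better than chance" means in results like these, the sketch below scores a set of guesses against known labels and compares the score with the accuracy expected from random guessing. It assumes equally likely categories and uniform random guessing, so chance is 1/k for k choices: 50% for the two-way categories and about 33% for the three-way education category. The function names and the toy labels are hypothetical; they are not the study's data or its analysis method.

```python
def accuracy(guesses, actual):
    """Fraction of guesses that match the true labels."""
    if len(guesses) != len(actual) or not actual:
        raise ValueError("need two non-empty label lists of equal length")
    correct = sum(g == a for g, a in zip(guesses, actual))
    return correct / len(actual)


def chance_level(num_categories):
    """Accuracy expected from uniform random guessing.

    Assumes every category is equally likely (a simplifying assumption).
    """
    return 1.0 / num_categories


def beats_chance(acc, num_categories):
    """True if an observed accuracy exceeds the uniform-guessing baseline."""
    return acc > chance_level(num_categories)


# Toy labels, invented for illustration only (not data from the study).
guesses = ["male", "female", "male", "male", "female"]
actual = ["male", "female", "female", "male", "female"]

acc = accuracy(guesses, actual)
print(acc)                        # 0.8 (4 of 5 guesses match)
print(beats_chance(acc, 2))       # True
print(beats_chance(0.75, 2))      # True: the reported 75% for gender vs. 50% chance
print(round(chance_level(3), 3))  # 0.333: baseline for a three-way category
```

A real analysis would also check whether the gap from chance is statistically significant, and might use the share of the most common category as the baseline when categories are unbalanced; both refinements are left out of this sketch.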

While it is true that men author more technology-related tweets than women, stereotyping led to the misconception that all technology tweeters were men.[1] Also, feminine-sounding people were assumed to be liberal, while masculine-sounding people were assumed to be conservative.[1] It's interesting to think about how this information might be applied. Would articles about the validity of global warming convince conservatives if they were written in a highly masculine tone?

References:

1. "Real Men Don't Say 'Cute'," Society for Personality and Social Psychology press release, November 15, 2016.

2. Jordan Carpenter, Daniel Preotiuc-Pietro, Lucie Flekova, Salvatore Giorgi, Courtney Hagan, Margaret L. Kern, Anneke E. K. Buffone, Lyle Ungar, and Martin E. P. Seligman, "Real Men Don't Say 'Cute': Using Automatic Language Analysis to Isolate Inaccurate Aspects of Stereotypes," Social Psychological and Personality Science, advance online publication, November 15, 2016, doi: 10.1177/1948550616671998.

3. Karl Leif Bates, "Would You Expect a 'Real Man' to Tweet 'Cute' or Not?" Duke Research Blog, November 16, 2016.