Simulating Galaxy Formation

December 10, 2015

General-purpose computers, such as desktop computers and most mainframe computers, are useful precisely because they are general purpose; that is, they'll do nearly everything you want them to do, from printing paychecks and keeping your calendar to calculating pi to millions of decimal places. Although computing hardware has become considerably faster through the decades, it's always slower than desired for certain applications. For that reason, special-purpose computers are built that are very good at doing just one specific calculation.

Alan Turing's Bombe, an electromechanical computer used for cryptanalysis during World War II, is an early example of such a special-purpose computer. I'm certain that government agencies have modern equivalents of this type of device for their own code-breaking efforts. Bitcoin mining is another application for special-purpose computers. Today's special-purpose computers are constructed from field-programmable gate arrays (FPGAs), which are reconfigurable logic-array integrated circuits, and from application-specific integrated circuits (ASICs).

Many years ago, I met a scientist who was building a special-purpose computer with such chips to simulate the formation and evolution of galaxies. Since these were the dark days before astronomers discovered the importance of dark matter and dark energy in such simulations, the computations were simpler than they would be today.

Advances in technology have given us supercomputers that perform nearly as well as special-purpose computers for most simulations. Fast multi-core CPUs, graphics processing units (GPUs), and massively parallel processing have boosted the performance of supercomputers. Of equal importance is the availability of fast, dense memory. Oak Ridge National Laboratory (ORNL) has one of the world's most powerful supercomputers.
This supercomputer, appropriately called Titan, has a theoretical peak performance of more than 20 petaflops; that is, 20 quadrillion floating-point calculations per second. The ORNL web site describes this computational performance as equivalent to each of the world's 7 billion people doing 3 million calculations per second. I wrote about Titan in a previous article (Titan Supercomputer, November 19, 2012).

The ORNL Titan supercomputer. Titan is built from AMD Opteron CPUs and Nvidia Tesla GPUs, and it runs a variant of Linux. (ORNL image.)
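ORNL's comparison is easy to check: 7 billion people each doing 3 million calculations per second gives about 2.1 × 10^16 calculations per second, a little more than 20 petaflops. A minimal Python sketch of that arithmetic, assuming (as the comparison implicitly does) that one human "calculation" counts as one floating-point operation:

```python
# Back-of-the-envelope check of ORNL's comparison for Titan's peak performance.
# Assumption: one human "calculation" is treated as one floating-point operation.
PEOPLE = 7e9              # world population used in ORNL's comparison
CALCS_PER_PERSON = 3e6    # calculations per second, per person
PETA = 1e15               # 1 petaflop = 10^15 floating-point operations per second

total = PEOPLE * CALCS_PER_PERSON
print(f"{total:.1e} calculations per second = {total / PETA:.0f} petaflops")
```

This prints `2.1e+16 calculations per second = 21 petaflops`, consistent with the "more than 20 petaflops" figure.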

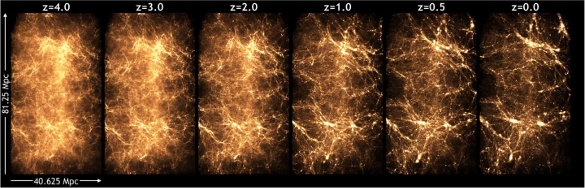

"Gravity acts on the dark matter, which begins to clump more and more, and in the clumps, galaxies form."[2]The Q Continuum simulation was run using the Hardware/Hybrid Accelerated Cosmology Code (HACC) software written in 2008. HACC was written to be adaptable to different supercomputer architectures.[2] The simulation generated two and a half petabytes of data, and such a data lode can't be analyzed quickly. The data analysis will take several years, and it's expected to generate insights into many astrophysical phenomena.[2] As Heitmann explains,

"We can use this data to look at why galaxies clump this way, as well as the fundamental physics of structure formation itself."[2]

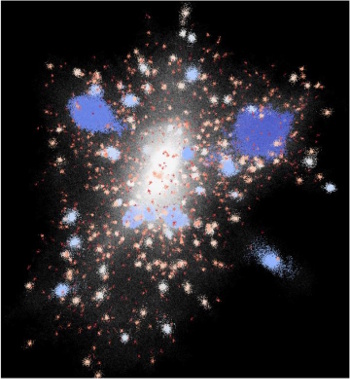

Galaxies with surrounding halos, as calculated by the Q Continuum simulation. Sub-halos are shown in different colors. (DOE/Argonne National Laboratory image by Heitmann et al.)


Galaxy formation, as revealed by the Q Continuum simulation. The matter in the universe is at first distributed almost uniformly. After a time, gravity acts on dark matter to clump the matter into galaxies. (DOE/Argonne National Laboratory image by Heitmann et al.)
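The clumping described here, with matter starting out almost uniform and gravity pulling it together, is at heart an N-body problem: every particle feels the gravitational pull of all the others. The Python/NumPy sketch below is only a toy under stated assumptions: a few hundred equal-mass particles starting at rest in a unit cube, units with G = 1, an arbitrarily chosen force-softening length `soft`, no cosmic expansion, and no dark energy. It uses direct O(N²) pairwise summation with a kick-drift-kick leapfrog integrator. This is not how HACC works; production cosmology codes use far more scalable force solvers. The function names and the `clumpiness` measure are hypothetical, for illustration only.

```python
import numpy as np

def accelerations(pos, mass, G=1.0, soft=0.05):
    """Softened Newtonian gravity by direct pairwise summation, O(N^2)."""
    # diff[i, j] = pos[j] - pos[i], shape (N, N, 3)
    diff = pos[np.newaxis, :, :] - pos[:, np.newaxis, :]
    # Softening (hypothetical value) avoids infinite forces in close encounters
    dist2 = np.sum(diff**2, axis=-1) + soft**2
    inv_d3 = dist2**-1.5
    np.fill_diagonal(inv_d3, 0.0)  # no self-force
    # a_i = G * sum_j m_j (r_j - r_i) / (|r_j - r_i|^2 + soft^2)^(3/2)
    return G * np.einsum("ij,ijk->ik", inv_d3 * mass[np.newaxis, :], diff)

def leapfrog(pos, vel, mass, dt, steps, **kw):
    """Kick-drift-kick leapfrog; returns final positions and velocities."""
    pos, vel = pos.copy(), vel.copy()
    acc = accelerations(pos, mass, **kw)
    for _ in range(steps):
        vel += 0.5 * dt * acc   # half kick
        pos += dt * vel         # drift
        acc = accelerations(pos, mass, **kw)
        vel += 0.5 * dt * acc   # half kick
    return pos, vel

def clumpiness(pos, radius=0.1):
    """Hypothetical measure: mean number of neighbours within `radius`, excluding self."""
    d = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)
    return (np.sum(d < radius) - len(pos)) / len(pos)

rng = np.random.default_rng(42)
n = 200
pos = rng.uniform(0.0, 1.0, size=(n, 3))  # almost uniform start
vel = np.zeros((n, 3))                    # particles start at rest
mass = np.full(n, 1.0 / n)                # total mass of 1

before = clumpiness(pos)
pos_end, vel_end = leapfrog(pos, vel, mass, dt=0.01, steps=200)
after = clumpiness(pos_end)
print(f"neighbours within r=0.1: before {before:.2f}, after {after:.2f}")
```

Because the pairwise forces are equal and opposite, total momentum stays at zero up to rounding error, a useful sanity check. Direct summation costs O(N²) per step, so it becomes impractical long before simulation sizes like Q Continuum's; that is one reason such simulations need supercomputers.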

References:

1. Katrin Heitmann, Nicholas Frontiere, Chris Sewell, Salman Habib, Adrian Pope, Hal Finkel, Silvio Rizzi, Joe Insley, and Suman Bhattacharya, "The Q Continuum Simulation: Harnessing the Power of GPU Accelerated Supercomputers," The Astrophysical Journal Supplement Series, vol. 219, no. 2 (August 21, 2015), dx.doi.org/10.1088/0067-0049/219/2/34.

2. Louise Lerner, "Researchers model birth of universe in one of largest cosmological simulations ever run," Argonne National Laboratory Press Release, October 29, 2015.