Computation Energetics

January 31, 2012

Back in the early days of personal computers, when software was slow to load and sometimes slow to execute its functions, I would be selective about which application I used for a particular task. I used Notepad a lot when I was working with simple text files, since word processing programs took too long to load. What never crossed my mind was that using the simplest program to accomplish a task actually saved electrical energy.

Until about a decade ago, most computers weren't battery powered, so you didn't think much about the energy involved in computation. If you were ecologically minded, you did do one thing, and that was to enable power-down of components when the computer was idle for fifteen minutes or so. My Linux system has a Power Management tab under its system preferences menu, and I'm sure that other operating systems have a similar capability.

Moore's Law, that the number of transistors in the densest integrated circuits doubles every eighteen months, is only possible because of a concurrent reduction in the power required to operate those transistors. When I was in graduate school, I was introduced to the "hairy, smoking golf ball" version of computing. At that time, in the early 1970s, more powerful computers were projected to be golf ball sized, to get around the finite speed of light; they would seem to be hairy, because of all the wires needed to interface to the outside world; and they would be smoking, because of the required operating power confined to such a small volume.

Engineers have definitely been working towards energy-efficient computing, as revealed in Koomey's Law. This law, as I discussed in a previous article (Koomey's Law, October 20, 2011), states that the number of computations per unit energy has doubled about every eighteen months.
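To get a feel for what an eighteen-month doubling implies, here is a small back-of-the-envelope sketch. The function name `efficiency_gain` and its parameters are my own for illustration, and the calculation assumes a perfectly steady doubling period, which real hardware only approximates.

```python
def efficiency_gain(years: float, doubling_months: float = 18.0) -> float:
    """Factor by which computations per unit energy grow over `years`,
    assuming an idealized, constant doubling period (an assumption)."""
    doublings = years * 12.0 / doubling_months
    return 2.0 ** doublings


if __name__ == "__main__":
    for years in (1.5, 3, 15):
        print(f"{years:>4} years: about {efficiency_gain(years):,.0f}x "
              "more computations per unit energy")
```

Under this idealized assumption, fifteen years is ten doublings, so the same energy would buy roughly a thousand times as many computations.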
This eighteen-month cycle is apparent from the vacuum tube days of 1950s computers, into transistorized computing, and now for integrated circuits.

Hardware isn't the only factor affecting energy consumption. A CPU doing extensive calculations will also crank up the watts. I experienced this phenomenon firsthand when a high-performance server I was using to compile a large piece of code went into thermal shutdown in the middle of the process. An internal cooling duct had shifted, so the CPU wasn't getting its fair share of air, and my compilation put it over the edge.

Monitoring energy usage is actually a method of discovering vulnerabilities in protected embedded system firmware by a side-channel attack. For example, the closer your password is to the true value, the less power the device might consume in comparing it against the actual password.

We've long passed the point at which battery-powered computing devices outnumber desktop computers, so energy efficiency in both hardware and software is necessary. Of course, programmers recognize a few obvious things they can do, like activating power-hungry GPS functions only when needed, and for as short a time as possible when they are needed. However, when choosing one video codec over another, it's not always obvious which will use less energy.

Kathryn McKinley, a professor of computer science at the University of Texas at Austin, along with Stephen M. Blackburn of the Australian National University and their graduate students, is systematically measuring and analyzing the energy efficiency of software on a variety of hardware. Their study is important not just for mobile computing, but for the power-hungry data centers that are expanding worldwide.[3] Owners of these data centers are working to make them more energy-efficient. Hardware has become inexpensive, but the power to run the hardware, along with the required cooling systems, has become expensive.
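The password side channel mentioned above comes down to a comparison whose workload depends on the secret. The sketch below is only an illustration: `leaky_compare` is a hypothetical early-exit routine that counts its own byte comparisons as a stand-in for energy or time, and whether a near-miss costs more or less than a wild guess depends on how the real firmware is written. Python's standard `hmac.compare_digest` is shown as the usual data-independent alternative.

```python
import hmac
from typing import Tuple


def leaky_compare(guess: bytes, secret: bytes) -> Tuple[bool, int]:
    """Early-exit comparison (hypothetical). Returns (match, byte comparisons done).
    The comparison count is a crude proxy for the energy an attacker could observe."""
    steps = 0
    if len(guess) != len(secret):
        return False, steps
    for g, s in zip(guess, secret):
        steps += 1
        if g != s:
            return False, steps  # stops at the first mismatch, leaking its position
    return True, steps


def constant_time_compare(guess: bytes, secret: bytes) -> bool:
    """Comparison whose running time does not depend on where the inputs differ."""
    return hmac.compare_digest(guess, secret)


if __name__ == "__main__":
    secret = b"hunter2!"  # made-up secret for the demonstration
    for guess in (b"xxxxxxxx", b"huxxxxxx", b"hunterxx", b"hunter2!"):
        ok, steps = leaky_compare(guess, secret)
        print(guess, ok, steps)
```

In this toy version, the work done changes with every additional correct leading byte, and that data-dependent difference is exactly what a power measurement can pick up.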
Intel, noting the importance of monitoring the energy requirements for computation, now provides the Intel® Energy Checker SDK, a software application that measures the productivity of a system and compares it against its energy consumption.

Says McKinley, "In the past, we optimized only for performance... If you were picking between two software algorithms, or chips, or devices, you picked the faster one. You didn't worry about how much power it was drawing from the wall socket. There are still many situations today - for example, if you are making software for stock market traders - where speed is going to be the only consideration. But there are a lot of other areas where you really want to consider the power usage."[3]

As an analog of the Energy Star program for software, McKinley thinks that applications should be labeled with an energy consumption figure so a consumer can decide whether or not she wants to add one to her smartphone.
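McKinley's point about picking between two algorithms can be made concrete with the relation energy = average power × time. The sketch below is not the Intel SDK's interface or the researchers' method; the `Run` class and the two measurements are invented purely to show that the faster option is not automatically the one that uses less energy.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """One hypothetical measurement of a program completing a fixed task."""
    name: str
    seconds: float    # wall-clock time to finish the task
    avg_watts: float  # average power drawn while running

    @property
    def joules(self) -> float:
        # Energy is average power multiplied by elapsed time.
        return self.seconds * self.avg_watts


# Illustrative numbers only, not measured data.
runs = [
    Run("fast_but_hungry", seconds=2.0, avg_watts=60.0),
    Run("slow_but_frugal", seconds=3.0, avg_watts=30.0),
]

fastest = min(runs, key=lambda r: r.seconds)
leanest = min(runs, key=lambda r: r.joules)

if __name__ == "__main__":
    for r in runs:
        print(f"{r.name}: {r.seconds} s, {r.avg_watts} W, {r.joules} J")
    print("fastest:", fastest.name)
    print("least energy:", leanest.name)
```

With these made-up figures the quicker program finishes a second sooner but spends 120 J against the slower program's 90 J, which is the kind of trade-off an energy label would expose.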

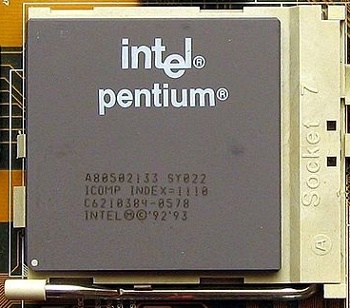

| Computing nostalgia. The first Pentium (P5) chip had about three million transistors operating from what was, at that time, a conventional five-volt supply. High voltages lead to high power consumption, so present-day CPU voltages are as low as one volt, and some CPUs use dynamic voltage scaling. (Via Wikimedia Commons.) |

References:

- Jonathan Koomey, Stephen Berard, Marla Sanchez and Henry Wong, "Implications of Historical Trends in the Electrical Efficiency of Computing," IEEE Annals of the History of Computing, vol. 33, no. 3 (July-September 2011), pp. 46-54.

- Kate Greene, "A New and Improved Moore's Law," Technology Review, September 12, 2011.

- Daniel Oppenheimer, "A big leap toward lowering the power consumption of microprocessors," University of Texas at Austin Press Release, January 20, 2012.
